SwagScanner

One of the only homegrown 3D scanners you will find on the internet.

Background

Github

- Python: https://github.com/seanngpack/swag-scanner

- C++: https://github.com/seanngpack/swag-scanner-cpp

About

SwagScanner is a 3D scanning system (in active development) that scans an object into cyberspace. The user places an object on the rotating bed, and the object is scanned at a constant angular interval for a full rotation. The data goes through an advanced processing pipeline that outputs a refined pointcloud. Swag Scanner has two codebases: one in Python (inactive) and one in C++. This page documents current development and explains how the scanner works.

Features

Software

High performance codebases in C++ and Python

Extensible camera interface allows use of any depth camera

Super fast depth deprojection

Saves pointclouds to files

Hardware

Elegant, integrated design

Simple bottom-up assembly

Self-locking gearbox

Rotating bed can withstand high axial & radial loads

Electronics

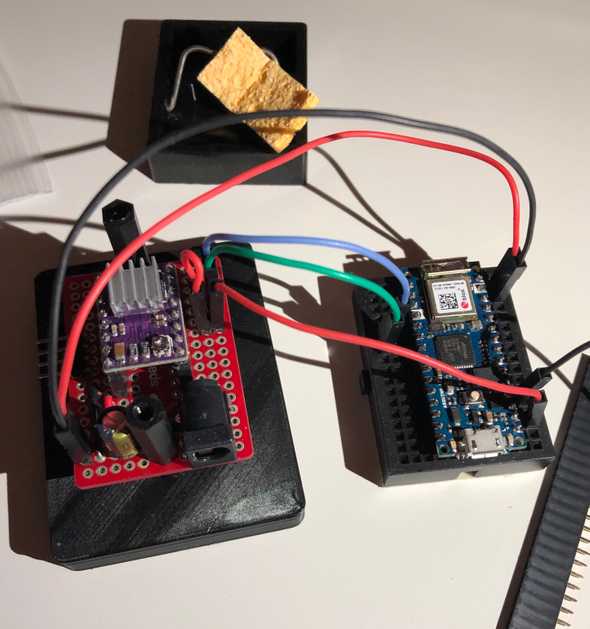

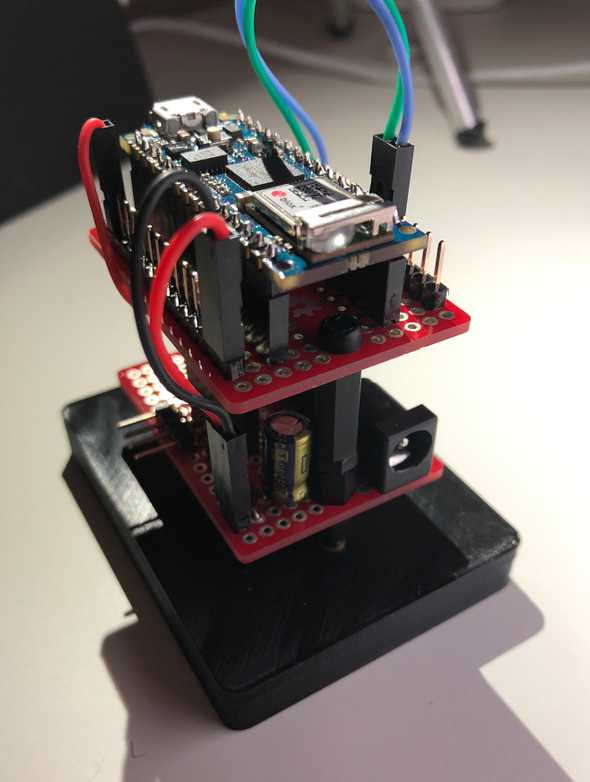

Custom vertical board design

Easy hot-swapping of the motor driver and Arduino boards

Minimized number of cables and cable lengths

Initial design process

I took inspiration from existing devices and sketched several different designs for the hardware architecture of the scanner. One of the main hardware decisions was whether the scanner should have a camera revolve around the object or have the object rotate. I chose the latter because that approach seemed to result in high-accuracy scans in addition to being much more feasible to build. Then I narrowed in on the specifics: I wanted the scanner to look aesthetically pleasing, have minimal cables, and support small to medium-sized objects. I achieved these design objectives with a modular design in which the distance between the scanning bed and the camera can be adjusted in both height and length, and the cables are hidden inside this mechanism. I created some basic dimensions for my sketch and began ordering metal hardware. Then I sketched and planned the electronics layout to fit inside my mechanical housing and ordered those parts soon after. I wanted the electronics to be robust and repairable, so I created my own stacked board design where the Arduino and motor driver can be hot-swapped without soldering.

As those parts were arriving, I hopped onto Fusion360 and CADed up my design to be 3D printed. As an additional challenge, I only used my trackpad to do the CAD. I took care in designing keep-out regions where the electronics were to be housed so that heat buildup and interference with other parts would be mitigated. I also went through many iterations to make the assembly of the parts extremely easy. This was one of the hardest parts of the build, because I had to work through building and designing the hardware backwards and forwards, anticipating pain points. Getting tolerances for fitting 3D printed parts was pretty easy, as I have a lot of experience designing 3D printed parts from my past personal projects and my co-op at Speck. As I was wrapping up the CAD design, I 3D printed the parts and started coding the brains of the project.

I had to bust out my linear algebra textbooks again to better understand how to program the scanner. I chose Python as the language because of its ease of use. I sketched up the architecture of my program and implemented it quickly before I had to leave California to go back to Boston. I managed to come up with a working prototype and even got to show it off at JPL for my final presentation!

Scanning Pipeline

In this section I include visuals and brief explanations on how the calibration, scanning, and processing pipelines work. This section is continuously updated as the pipeline changes.

More details

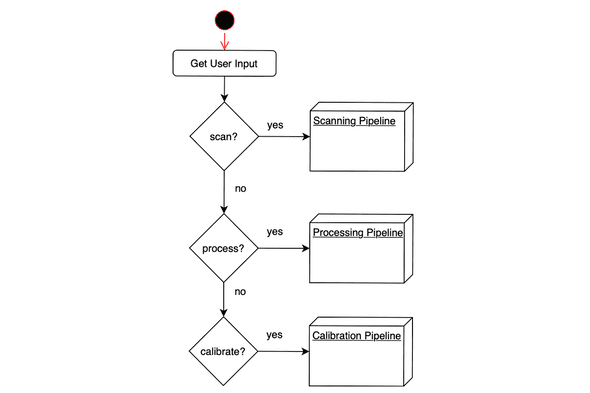

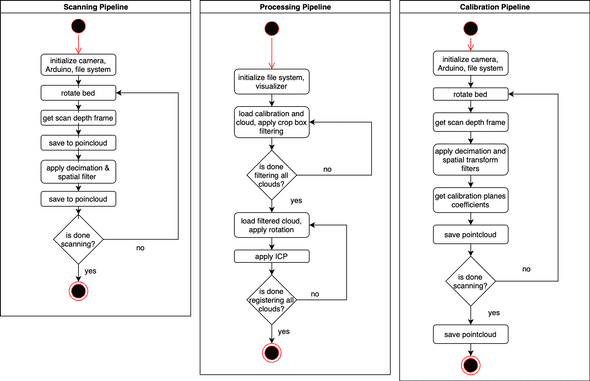

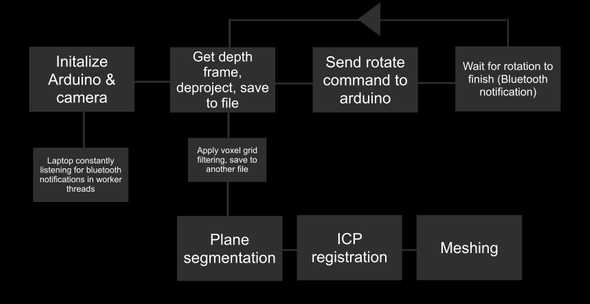

The diagrams shown in this section are very high-level overviews of the program flow. A low-level diagram is a work in progress. The diagrams show sequential actions; concurrent processing is detailed in other sections. The image below shows how user input is used to select the appropriate pipeline.

The image below shows how scanning, processing, and calibration work.

Calibration

This section details the method I used to calculate the center of the rotation table and how it is used to perform initial registration and point removal. If you like linear algebra, you’re in for a treat!

warning: lots of math

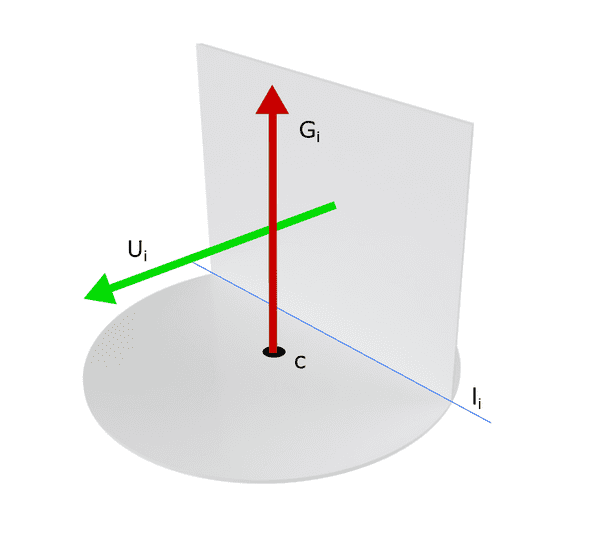

Calibration fixture

Here is the physical calibration fixture. It has an upright plane and a ground plane. This design is inspired by the calibration fixture used on the 3D scanner I worked on at JPL. The diagram labels the ground plane normal, the upright plane normal, the center point, and the line of intersection between the ground and upright planes.

Calculating axis of rotation

The axis of rotation is the normal vector of the ground plane. Using RANSAC plane segmentation, with an initial guess for the plane normal and an epsilon value for the margin of error of that guess, we can extract the equation of the ground plane. Multiple scans are taken, and the final rotation axis is calculated by averaging their normals.
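RANSAC can return a plane normal with either sign, so the normals need a consistent orientation before they are averaged. A minimal sketch, assuming normals are 3-tuples and that the caller supplies a hypothetical `reference` direction that the axis should roughly point along:

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def average_axis(normals, reference):
    """Average ground plane normals from several scans into one unit axis.

    Each normal is first flipped, if needed, to point the same way as
    `reference` (a hypothetical hint, e.g. roughly "up" in the camera frame),
    so that opposite-signed RANSAC results do not cancel out.
    """
    acc = [0.0, 0.0, 0.0]
    for n in normals:
        n = normalize(n)
        if sum(a * b for a, b in zip(n, reference)) < 0:
            n = tuple(-c for c in n)
        for i in range(3):
            acc[i] += n[i]
    return normalize(acc)
```

Averaging unit vectors and renormalizing is the simplest choice; with noisy scans you could also weight each normal by its inlier count.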

Obtaining upright plane equations

After obtaining the ground plane equation, finding the upright plane is straightforward. Because the upright plane is orthogonal to the ground plane, we can give the RANSAC plane detection function an axis perpendicular to the ground plane normal.
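The constraint boils down to an angular tolerance: a candidate plane counts as upright if its normal is within some epsilon of being perpendicular to the ground normal. PCL's SACSegmentation exposes a similar constraint through setAxis() and setEpsAngle(); the helper below is a hypothetical stand-alone version of that acceptance test, not PCL's code:

```python
import math

def angle_between(u, v):
    """Angle in radians between two nonzero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] so rounding never pushes acos out of its domain.
    return math.acos(max(-1.0, min(1.0, dot / (nu * nv))))

def is_upright_candidate(candidate_normal, ground_normal, eps_angle):
    """Accept a plane whose normal is within eps_angle (radians) of
    being perpendicular to the ground normal, i.e. an upright plane."""
    return abs(angle_between(candidate_normal, ground_normal) - math.pi / 2) <= eps_angle
```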

Calculating center point

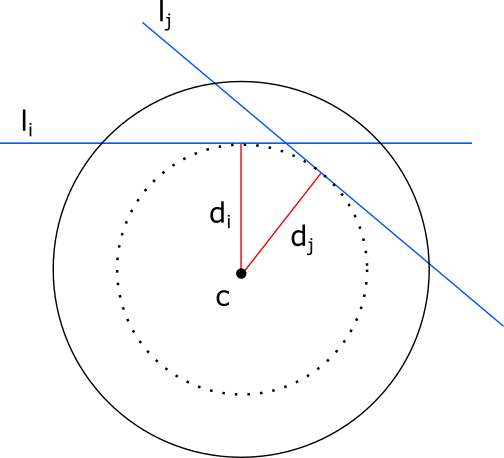

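The distance between the center point and the line of intersection is the same for every scan, because the fixture rotates rigidly about the center. Knowing this geometric relation, we can derive an expression for this distance and ultimately solve for the center point.

First we start with some definitions: each scan gives a ground plane and an upright plane, each described by a normal vector and an offset, and we call the unknown center point c.

The direction v of the line of intersection is the cross product of the ground plane normal and the upright plane normal.

Now we can write the line as P + t·v, where P is any point that lies on both planes and t is a scalar.

The vector from P to c and the direction v span a parallelogram, and its area is the norm of their cross product, ||(c - P) × v||.

At this point we can calculate the distance D from c to the line by dividing the area of the parallelogram by its base, the norm of v: D = ||(c - P) × v|| / ||v||.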
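These steps translate directly into code. A minimal sketch in plain Python with 3-tuples for vectors (the function names are our own): for planes n1 · x + d1 = 0 and n2 · x + d2 = 0, with h = -d for each plane, one point on the line is P = (h1 (n2 × v) + h2 (v × n1)) / ||v||², which is the point of the line closest to the origin.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def plane_intersection(n1, d1, n2, d2):
    """Line where planes n1.x + d1 = 0 and n2.x + d2 = 0 meet.

    Returns (P, v): a point P on both planes and the direction v = n1 x n2.
    """
    v = cross(n1, n2)
    vv = dot(v, v)
    if vv == 0:
        raise ValueError("planes are parallel")
    h1, h2 = -d1, -d2
    a = cross(n2, v)
    b = cross(v, n1)
    P = tuple((h1 * a[i] + h2 * b[i]) / vv for i in range(3))
    return P, v

def point_line_distance(c, P, v):
    """Parallelogram area ||(c - P) x v|| divided by its base ||v||."""
    w = tuple(c[i] - P[i] for i in range(3))
    area_vec = cross(w, v)
    return math.sqrt(dot(area_vec, area_vec)) / math.sqrt(dot(v, v))
```

For example, the ground plane z = 0 and the upright plane x = 2 meet along the line through (2, 0, 0) with direction (0, 1, 0), which is 2 units from the origin.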

The symbolic solution is very complex, so I used an elegant solution derived by Yuping Ye and Zhan Song in this paper using a similar method.

As mentioned earlier, D should be the same for each scan, so the distance expressions for any two scans can be set equal to each other.

Stacking these equalities for all scans gives a linear system in the coordinates of c, which can be written in matrix form.

You still here? We’re almost done! The plane parameters from every scan are known, so the only unknowns are the coordinates of the center point. If we take more than three scans we get an overdetermined system, with more equations than unknowns. We can find an approximate solution to an overdetermined system using a least squares method. MATLAB’s linear least squares method lsqr and Eigen’s bdcsvd method return the same results.
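Here is one possible way to set up and solve that system, in our own notation and not necessarily the formulation from the paper. Assume each scan i gives a unit upright normal n_i and offset d_i, all oriented so the center lies on their positive side, and that the center c lies on the ground plane n_g · x + d_g = 0. Because the upright plane is perpendicular to the ground plane, the distance from c to the line of intersection is then n_i · c + d_i, and equating consecutive scans gives (n_i - n_(i+1)) · c = d_(i+1) - d_i. Adding the ground plane row n_g · c = -d_g yields one equation per scan, so more than three scans make the system overdetermined. This sketch solves it with the normal equations in plain Python; an SVD-based solver (such as the Eigen one mentioned above) is the more numerically robust choice.

```python
def solve3(M, y):
    """Solve the 3x3 system M x = y by Gaussian elimination with partial pivoting."""
    A = [list(M[i]) + [y[i]] for i in range(3)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("singular system: scans need more distinct angles")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, 3):
            f = A[r][col] / A[col][col]
            for k in range(col, 4):
                A[r][k] -= f * A[col][k]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):  # back substitution
        x[i] = (A[i][3] - sum(A[i][k] * x[k] for k in range(i + 1, 3))) / A[i][i]
    return x

def solve_center(upright_planes, ground_plane):
    """Least-squares center of rotation.

    upright_planes: list of (n, d) per scan, unit normals oriented consistently.
    ground_plane: (n_g, d_g).
    """
    rows, rhs = [], []
    # Equal distances for consecutive scans: (n_a - n_b) . c = d_b - d_a
    for (n_a, d_a), (n_b, d_b) in zip(upright_planes, upright_planes[1:]):
        rows.append([n_a[j] - n_b[j] for j in range(3)])
        rhs.append(d_b - d_a)
    # The center lies on the ground plane: n_g . c = -d_g
    n_g, d_g = ground_plane
    rows.append(list(n_g))
    rhs.append(-d_g)
    # Normal equations A^T A c = A^T b
    M = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    y = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve3(M, y)
```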

Aligning point cloud to world coordinate

Okay, so we now have the axis of rotation and the center point. This is exactly what we need to transform a scanned pointcloud into the world coordinate frame. Why do we care about orienting a cloud? Aligning a pointcloud to the world frame is useful for several reasons. First, it simplifies applying a rigid rotation. Second, it makes the raw point data more intuitive to understand, because the reference point is (0,0,0). In addition, it simplifies defining the dimensions of a box filter. The transformation is easy: translate the center point to the origin, then align the axis of rotation with the z-axis, and we’re done.

We know that the center point is a translation of the camera frame origin (0,0,0). Negating this translation gives the transform from the center point back to the camera origin, which we write as a 4x4 translation matrix.

Next, we want to get the angle θ between the axis of rotation and the camera z-axis. This angle tells us how to rotate the cloud so that its z-axis points upward in the final cloud. Because both vectors are normalized, the cosine of the angle between them is their dot product, so θ = arccos(axis · z).

Sweet, we know θ. To align the axis of rotation with the camera z-axis, we rotate by θ about the x-axis, which works when the axis of rotation lies in the camera’s y-z plane (that is, when the camera is not rolled relative to the table). We construct the corresponding 4x4 rotation matrix.

Now we create an affine transformation matrix by composing the rotation with the translation. We want to translate first and then rotate, so the combined transform is the rotation matrix multiplied by the translation matrix.
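A sketch of the whole alignment in plain Python with 4x4 nested lists (helper names are our own). Instead of a hard-coded x-axis rotation, it uses Rodrigues' formula about k = axis × z, which reduces to a rotation about the x-axis when the axis of rotation has no x component and also covers a slightly rolled camera:

```python
import math

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def translation(t):
    """4x4 homogeneous translation by the vector t."""
    return [[1.0, 0.0, 0.0, t[0]],
            [0.0, 1.0, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]

def rotation_to_z(axis):
    """4x4 rotation taking the unit vector `axis` onto +z (Rodrigues' formula).

    Rotates by theta = acos(axis . z) about k = (axis x z) / |axis x z|.
    """
    ax, ay, az = axis
    cos_t = max(-1.0, min(1.0, az))   # axis . z for a unit axis
    kx, ky = ay, -ax                  # axis x z = (ay, -ax, 0)
    s = math.hypot(kx, ky)            # |axis x z| = sin(theta)
    if s < 1e-12:
        # Already along +z, or anti-parallel: flip 180 degrees about x.
        sign = 1.0 if az > 0 else -1.0
        return [[1.0, 0.0, 0.0, 0.0], [0.0, sign, 0.0, 0.0],
                [0.0, 0.0, sign, 0.0], [0.0, 0.0, 0.0, 1.0]]
    kx, ky = kx / s, ky / s           # unit rotation axis with kz = 0
    C = 1.0 - cos_t
    # R = cos(t) I + sin(t) [k]x + (1 - cos(t)) k k^T
    return [[cos_t + kx * kx * C, kx * ky * C, ky * s, 0.0],
            [kx * ky * C, cos_t + ky * ky * C, -kx * s, 0.0],
            [-ky * s, kx * s, cos_t, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def alignment_transform(center, axis):
    """Translate the center to the origin first, then rotate the axis onto +z."""
    return matmul(rotation_to_z(axis), translation([-x for x in center]))

def apply(T, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    return tuple(sum(T[i][j] * v for j, v in enumerate((*p, 1.0))) for i in range(3))
```

After the transform, the center maps to (0, 0, 0) and a point 5 units along the axis from the center maps to (0, 0, 5).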

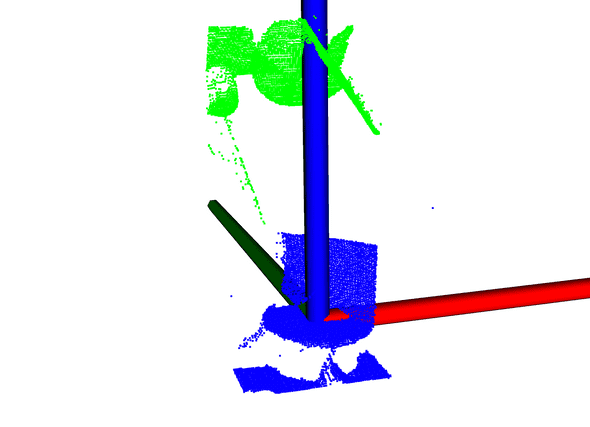

At this point we can apply the result to our pointcloud and align it to the world origin with z pointing up! The image below shows the original cloud in green and the transformed cloud in blue.

Refinement

Sometimes the algorithm places the center point above or below the ground plane. To refine this point and place it on the surface, we project the calculated center point onto the ground plane (a point-to-plane projection).
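The projection moves the point along the plane normal by its signed distance to the plane: p' = p - ((n · p + d) / ||n||²) n. A minimal sketch (the function name is our own):

```python
def project_onto_plane(p, n, d):
    """Orthogonally project point p onto the plane n . x + d = 0.

    n does not need to be unit length; dividing by ||n||^2 accounts for it.
    """
    nn = sum(c * c for c in n)
    t = (sum(a * b for a, b in zip(n, p)) + d) / nn
    return tuple(pi - t * ni for pi, ni in zip(p, n))
```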

Automatic point removal

After aligning the pointcloud to the world origin, we can define a crop box and eliminate the points outside of it. The box is easy to construct because the center point is now at (0,0,0), so any distance measured from that point defines a boundary of the box.
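Once the cloud is in the world frame, the crop box reduces to a per-point bounds check. A minimal sketch; the box dimensions are hypothetical parameters:

```python
def crop_box(points, half_x, half_y, z_min, z_max):
    """Keep points inside an axis-aligned box centered on the turntable.

    Assumes the cloud is already aligned so the table center is (0, 0, 0)
    and z points up; the bounds are hypothetical, chosen per setup.
    """
    return [p for p in points
            if abs(p[0]) <= half_x and abs(p[1]) <= half_y and z_min <= p[2] <= z_max]
```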

Software Design

SwagScanner’s codebase is quickly growing with a multitude of features being added.

C++ Program Design

* This section is still WIP *

High level architecture

I used the MVC (model-view-controller) pattern to organize the project structure. I chose this pattern for its clear separation of concerns.

Model

The models are represented by the data handling objects which include the Arduino, Camera and main Model classes. These model objects are managed by the controllers.

Controller

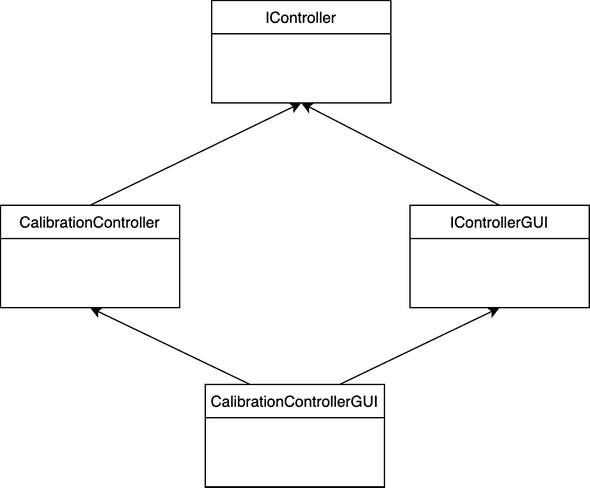

Controllers handle the logic for the scanning, calibration, and processing pipelines in addition to connecting the models to the views. I created an IController abstract base class, which is functionally equivalent to a Java abstract class. This base class contains pure virtual methods implemented by specialized controllers such as CalibrationController. This way, I can store specialized controllers as their abstract type, IController, and simply call run() to have each controller perform its specialized task. Later, when I added a GUI, I did not have to refactor the original implementations of IController and its children. I accomplished this through multiple inheritance. First, I made an IControllerGUI abstract base class that inherits IController. Then, each specialized GUI class inherits both IControllerGUI and a child class of IController. The diagram below illustrates the multiple inheritance pattern:

This diamond shape is common in multiple inheritance and can lead to errors. With ordinary inheritance, CalibrationGUI would contain two IController subobjects, one through CalibrationController and one through IControllerGUI. Calls to IController members (and conversions to IController*) would then be ambiguous, because the compiler cannot tell which of the two subobjects you mean. This is solved by having CalibrationController and IControllerGUI inherit IController virtually, so that CalibrationGUI holds a single shared IController.
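In C++ the fix is written as `class CalibrationController : public virtual IController` and `class IControllerGUI : public virtual IController`. Python's method resolution order always shares a single copy of a common base, so the analogous sketch below needs no extra keyword; it only illustrates the shape of the hierarchy. The `update_view()` hook and the return values are hypothetical.

```python
from abc import ABC, abstractmethod

class IController(ABC):
    """Abstract base: every controller can be run."""
    @abstractmethod
    def run(self): ...

class CalibrationController(IController):
    """CLI controller holding the calibration logic."""
    def run(self):
        return "calibrating"

class IControllerGUI(IController):
    """Adds the hook a GUI needs on top of IController (hypothetical)."""
    @abstractmethod
    def update_view(self, message): ...

class CalibrationGUI(CalibrationController, IControllerGUI):
    """Reuses CalibrationController.run() and only adds GUI plumbing."""
    def __init__(self):
        self.messages = []

    def update_view(self, message):
        self.messages.append(message)

    def run(self):
        result = super().run()   # resolves to CalibrationController.run
        self.update_view(result)
        return result
```

Here `CalibrationGUI.__mro__` lists IController exactly once, which is the Python counterpart of the single virtual base subobject.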

Multiple inheritance can be tricky, but I think it makes sense in my use case. The derived classes of IController are used by the CLI program, and the children of IControllerGUI only add a little code to interact with the GUI, so reusing the code defined in IController’s children saves a lot of repetition.

View

Swag Scanner has a few different views: a CLI view, a GUI view, and a PCL visualization view. The view is managed by the controller, and the controller updates the view with data. The GUI view is a bit more complex. I built it with Qt, which follows its own model-view paradigm: it merges the responsibilities of the view and the controller and stores the data in QModel. Using Qt is really weird. It uses its own Meta-Object Compiler to provide features such as signals and slots. You are also relegated to using raw pointers when instantiating objects, but as long as an object has a parent there is no need to call delete on it, because a QObject deletes its children when it is destroyed. I decided to opt out of using their model class and instead enforce my MVC design by treating the Qt interface strictly as a view. User actions and data are passed from the view to the controller and back through a system of signals and slots. This creates a circular dependency, because the view must hold a reference to the controller and the controller must hold one to the view. To solve this issue, I created a setter method in the view to store a reference to the controller.
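A Qt-free sketch of the setter approach, with plain method calls standing in for signals and slots; all class and method names are hypothetical. The view is constructed first, the controller receives it in its constructor, and the setter closes the loop afterwards:

```python
class View:
    """Stand-in for the GUI view; it only forwards user actions."""
    def __init__(self):
        self.controller = None
        self.shown = []

    def set_controller(self, controller):
        # The setter breaks the construction-order cycle: the view exists first.
        self.controller = controller

    def on_scan_clicked(self):
        self.controller.handle("scan")

    def display(self, text):
        self.shown.append(text)

class Controller:
    def __init__(self, view):
        self.view = view

    def handle(self, action):
        # Real work would happen here; the result flows back to the view.
        self.view.display(f"{action} done")

view = View()
controller = Controller(view)
view.set_controller(controller)
view.on_scan_clicked()
```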

Dynamic controller switching and caching

Because there are several specialized controllers, the view needs access to them for performing different actions. It is very expensive to keep initializing and destroying controllers and their parameter objects, so I created a caching system to handle this. The top level contains a factory that returns a controller. If the controller does not exist in the cache, the factory creates a new instance and stores it; if it already exists, the factory returns a reference to the cached one. The caching system preallocates the most frequently used controllers, so this overhead is paid at program launch instead of during usage.
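A minimal sketch of that cache, assuming controllers are identified by name and built by zero-argument constructors (both hypothetical). `preload` is where the most-used controllers would be built at launch:

```python
class ControllerFactory:
    """Returns cached controllers, creating each kind at most once."""
    def __init__(self, constructors, preload=()):
        self._constructors = constructors  # name -> zero-argument constructor
        self._cache = {}
        for name in preload:               # pay the setup cost at launch
            self.get(name)

    def get(self, name):
        if name not in self._cache:
            self._cache[name] = self._constructors[name]()
        return self._cache[name]
```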

File handling

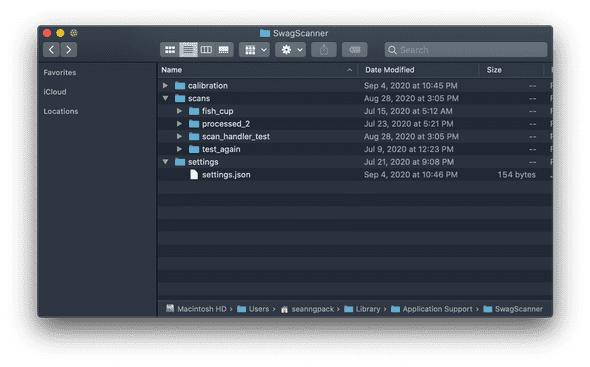

I wrote a custom file handling system to manage SwagScanner’s settings and scanning data. When Swag Scanner is launched for the first time, it creates its system folder in the user’s ~/Library/Application Support directory, which is where other macOS applications store their data. The picture below shows the structure of Swag Scanner’s system folder.

The file handler system supports many features. It can automatically create new scan folders with auto-incremented names and dynamically update settings.
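A minimal sketch of auto-incremented scan folders with pathlib, assuming a hypothetical naming scheme in which scans are named 1, 2, 3, ... and any non-numeric folder is ignored:

```python
from pathlib import Path

def next_scan_folder(scans_root):
    """Create and return the next numbered scan folder under scans_root.

    Hypothetical scheme: folders named 1, 2, 3, ...; other names are ignored.
    """
    root = Path(scans_root)
    root.mkdir(parents=True, exist_ok=True)
    numbers = [int(p.name) for p in root.iterdir() if p.is_dir() and p.name.isdigit()]
    folder = root / str(max(numbers, default=0) + 1)
    folder.mkdir()
    return folder
```

On macOS the scans root would live somewhere under `Path.home() / "Library" / "Application Support"`.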

Testing

I use GoogleTest and am in the process of increasing code coverage.

Random CMake thoughts

At first, I hated CMake, but that’s probably because I didn’t really understand what was going on. Now that I understand it a bit better after slogging through its documentation, I have gained a new appreciation for it. Swag Scanner has a CMakeLists.txt file in each of its subdirectories, which will let me fully control which parts of the system get built. Building this project from scratch takes forever, so being able to opt out of building subsystems you won’t use is nice.

Also, here is a technique I haven’t seen many people apply: I compile Swag Scanner as a static library, then link it to both the main executable and the unit test executable. This is a complete game changer because the core files no longer have to be compiled twice to run the unit tests!

Python Program Design (old)

Entry Point

First, we define the entry point of the application, scan.py, and create a Scan() object that abstracts each major step in the scanning pipeline so the steps can be run sequentially (note: not all actions are synchronous in SwagScanner!).

Camera()

The Camera() class is an interface that can be extended to support any depth camera. For example, the D435 object overrides the get_intrinsics() method with RealSense API calls to get the intrinsics of the camera.
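A sketch of that interface with the abc module. Only get_intrinsics() comes from the description above; the Intrinsics fields mirror the usual pinhole parameters, while get_depth_frame() and FakeCamera are hypothetical. A real D435 subclass would fill these values from the RealSense SDK instead of hard-coding them:

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass

@dataclass
class Intrinsics:
    fx: float   # focal lengths in pixels
    fy: float
    ppx: float  # principal point in pixels
    ppy: float

class Camera(ABC):
    """Interface every depth camera implements."""
    @abstractmethod
    def get_intrinsics(self) -> Intrinsics: ...

    @abstractmethod
    def get_depth_frame(self): ...

class FakeCamera(Camera):
    """Hypothetical stand-in used for testing without hardware."""
    def get_intrinsics(self):
        return Intrinsics(fx=2.0, fy=2.0, ppx=1.0, ppy=1.0)

    def get_depth_frame(self):
        return [[1.0, 1.0], [1.0, 2.0]]
```

Code that only talks to `Camera` never needs to know which device it is using, which is what makes swapping cameras cheap.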

Arduino()

The Arduino() class provides methods to initialize the Arduino and send Bluetooth commands to it. We subscribe to asynchronous notifications from a custom Bluetooth service that provides table state information.

DepthProcessor()

This class is a factory that takes in a Camera() object and a boolean flag and returns either a fast or a slow depth processing unit. The fast unit provides deproject_depth_frame() with vectorized math operations for pixel-to-point deprojection. The slow unit uses a much slower (300x) double for loop to perform the same task. One drawback of the fast deprojection method is that it does not account for any distortion model. With Intel depth cameras that is OK, because the developers advise that distortion is low enough to skip it. The same may not be true for the Kinect, however. If you would like to include your own distortion model, subclass DepthProcessor() and override the deproject_depth_frame() method.
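Both units compute the same pinhole deprojection. The per-pixel version below corresponds to the slow path; the fast unit applies the same formula to whole arrays at once (for example with NumPy, which is an assumption here). A minimal sketch with no distortion model, taking intrinsics as a plain dict:

```python
def deproject_depth_frame(depth, intr):
    """Pinhole deprojection of a depth image (rows of depths in meters).

    Pixel (u, v) with depth z maps to
        x = (u - ppx) / fx * z,  y = (v - ppy) / fy * z.
    No distortion model is applied. Zero-depth (invalid) pixels are skipped.
    """
    points = []
    for v, row in enumerate(depth):        # v: row index
        for u, z in enumerate(row):        # u: column index
            if z > 0:
                points.append(((u - intr["ppx"]) / intr["fx"] * z,
                               (v - intr["ppy"]) / intr["fy"] * z,
                               z))
    return points
```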

Filtering()

This class provides the tools to perform voxel grid filtering, which downsamples our pointcloud according to the leaf_size parameter, and saves the result. This step is essential for registration because registering a massive pointcloud would take a very long time to converge. We also have to segment the plane out of each pointcloud during filtering. We run the RANSAC (random sample consensus) algorithm to fit a plane model (ax + by + cz + d = 0) to our cloud and detect the inliers. Using the inliers and plane model, we can reject those points and obtain a pointcloud without the scanning bed plane. This is essential to do before registration; otherwise ICP can match points belonging to the bed plane and report a false-positive convergence.
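Both filtering steps can be sketched from scratch in plain Python. This illustrates voxel grid downsampling (one centroid per occupied cube of side leaf_size) and RANSAC plane removal; it is not the library code SwagScanner calls, and `threshold`, `iterations`, and `seed` are hypothetical parameters:

```python
import random

def fit_plane(p1, p2, p3):
    """Plane (a, b, c, d) with a unit normal through three points, or None if collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    w = [p3[i] - p1[i] for i in range(3)]
    n = [u[1] * w[2] - u[2] * w[1], u[2] * w[0] - u[0] * w[2], u[0] * w[1] - u[1] * w[0]]
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = (x / norm for x in n)
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])

def ransac_plane(points, threshold, iterations=200, seed=0):
    """Return (plane, inlier_indices) for the sampled plane with the most inliers."""
    rng = random.Random(seed)
    best, best_inliers = None, []
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [i for i, p in enumerate(points)
                   if abs(a * p[0] + b * p[1] + c * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best, best_inliers = plane, inliers
    return best, best_inliers

def remove_plane(points, threshold):
    """Drop the inliers of the dominant plane (e.g. the scanning bed)."""
    _, inliers = ransac_plane(points, threshold)
    keep = set(range(len(points))) - set(inliers)
    return [points[i] for i in sorted(keep)]

def voxel_downsample(points, leaf_size):
    """Replace all points in each leaf_size cube with their centroid."""
    voxels = {}
    for p in points:
        key = tuple(int(c // leaf_size) for c in p)
        voxels.setdefault(key, []).append(p)
    return [tuple(sum(c) / len(v) for c in zip(*v)) for v in voxels.values()]
```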

Registration()

The Registration() class provides the tools to iteratively register pairs of clouds. Using global iterative registration, we define a global_transform variable as the 4x4 identity matrix. Then we apply the iterative closest point (ICP) algorithm to a source, target cloud pair and get the source->target transformation as a 4x4 matrix. Then we take the inverse of that matrix, transf_inv, to get the transformation from target->source. We multiply the target by the global transform (remember: on the first iteration, global_transform is still the identity matrix) to get the target cloud in the same reference frame as the source, and save the cloud. Then we multiply global_transform by transf_inv to update the global transformation. Move on to the next pair of clouds and repeat.
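A sketch of the accumulation with 4x4 nested lists; the helper names are our own. One judgment call differs from the ordering described above: here global_transform is multiplied by transf_inv before it is applied, so every target cloud, including the first one, ends up in the first cloud's frame. The inverse of a rigid transform [R t; 0 1] is computed in closed form as [Rᵀ -Rᵀt; 0 1].

```python
def rigid_inverse(T):
    """Inverse of a 4x4 rigid transform [R t; 0 1], i.e. [R^T -R^T t; 0 1]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [-sum(Rt[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [Rt[0] + [t[0]], Rt[1] + [t[1]], Rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def accumulate(pairwise):
    """Chain pairwise ICP results into transforms back to the first cloud's frame.

    pairwise[i] maps cloud i (source) onto cloud i+1 (target). Returns, for
    each target cloud i+1, the transform that expresses it in cloud 0's frame.
    """
    global_transform = [[float(i == j) for j in range(4)] for i in range(4)]
    result = []
    for transf in pairwise:
        transf_inv = rigid_inverse(transf)  # target -> source
        global_transform = matmul(global_transform, transf_inv)
        result.append(global_transform)
    return result
```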

Bluetooth & Concurrency

I wrote a library to handle Bluetooth functionality; check it out on GitHub. The Bluetooth library uses semaphores and callbacks to control program flow. In Swag Scanner, I use a simple mutex and condition variable in the Arduino’s rotate() method, which blocks the calling thread until the Arduino sends a notification that the table has stopped rotating.
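A minimal sketch of the blocking rotate() pattern using Python's threading.Condition, which pairs a lock with a condition variable. The Bluetooth write (`send`) and the "idle" state string are hypothetical stand-ins for the real library:

```python
import threading

class Arduino:
    """rotate() blocks until a (simulated) Bluetooth notification arrives."""
    def __init__(self, send):
        self._send = send                  # hypothetical: writes a BLE command
        self._cv = threading.Condition()   # lock + condition variable
        self._rotating = False

    def rotate(self, degrees, timeout=5.0):
        with self._cv:
            self._rotating = True
            self._send(degrees)
            # wait_for re-checks the predicate, so spurious wakeups are harmless.
            done = self._cv.wait_for(lambda: not self._rotating, timeout)
        if not done:
            raise TimeoutError("table did not report that it stopped")

    def on_notification(self, table_state):
        """Callback invoked from the Bluetooth library's notification thread."""
        if table_state == "idle":
            with self._cv:
                self._rotating = False
                self._cv.notify_all()
```

The timeout is an addition of ours so a lost notification cannot hang the scan forever.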

As noted in the GUI section, Qt must run on the main thread, so processing and scanning commands are dispatched to other threads. This keeps the GUI responsive while actions are running.
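A sketch of the dispatch pattern with concurrent.futures; `long_scan` is a hypothetical placeholder for scanning or processing work. The UI handler submits the job and returns immediately, and the result is collected from the future (in Qt, it would instead be sent back to the main thread through a signal):

```python
from concurrent.futures import ThreadPoolExecutor
import time

# One worker keeps scan and processing jobs in submission order.
executor = ThreadPoolExecutor(max_workers=1)

def long_scan():
    time.sleep(0.1)  # stands in for scanning or processing work
    return "scan finished"

def on_scan_button():
    """Called on the UI thread: dispatch the work and return immediately."""
    return executor.submit(long_scan)
```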

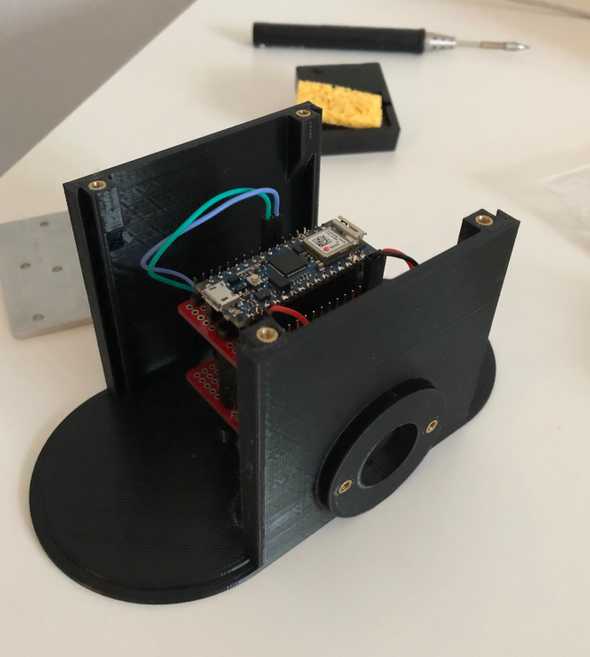

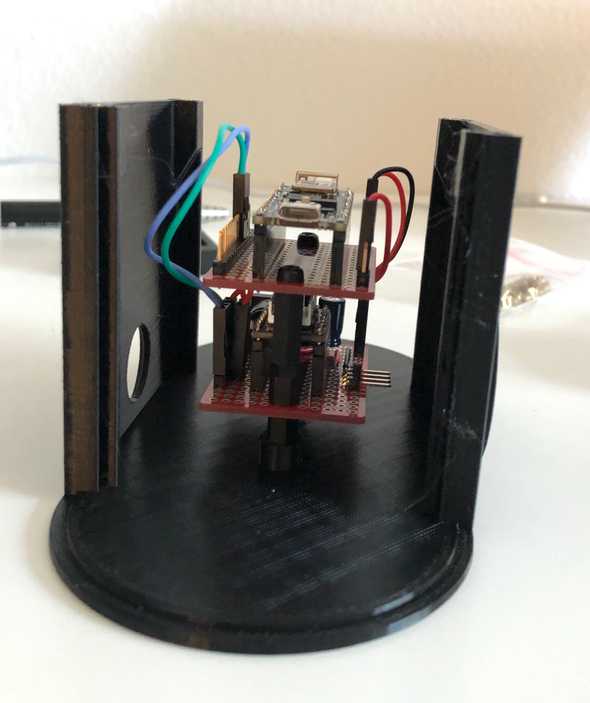

Hardware Design

This section details the design process I went through to create the hardware. The main idea was that I initially wanted a semi-modular platform whose parameters I could tweak as I learned more about scanning. SwagScanner is currently undergoing a complete hardware revamp.

Hardware design

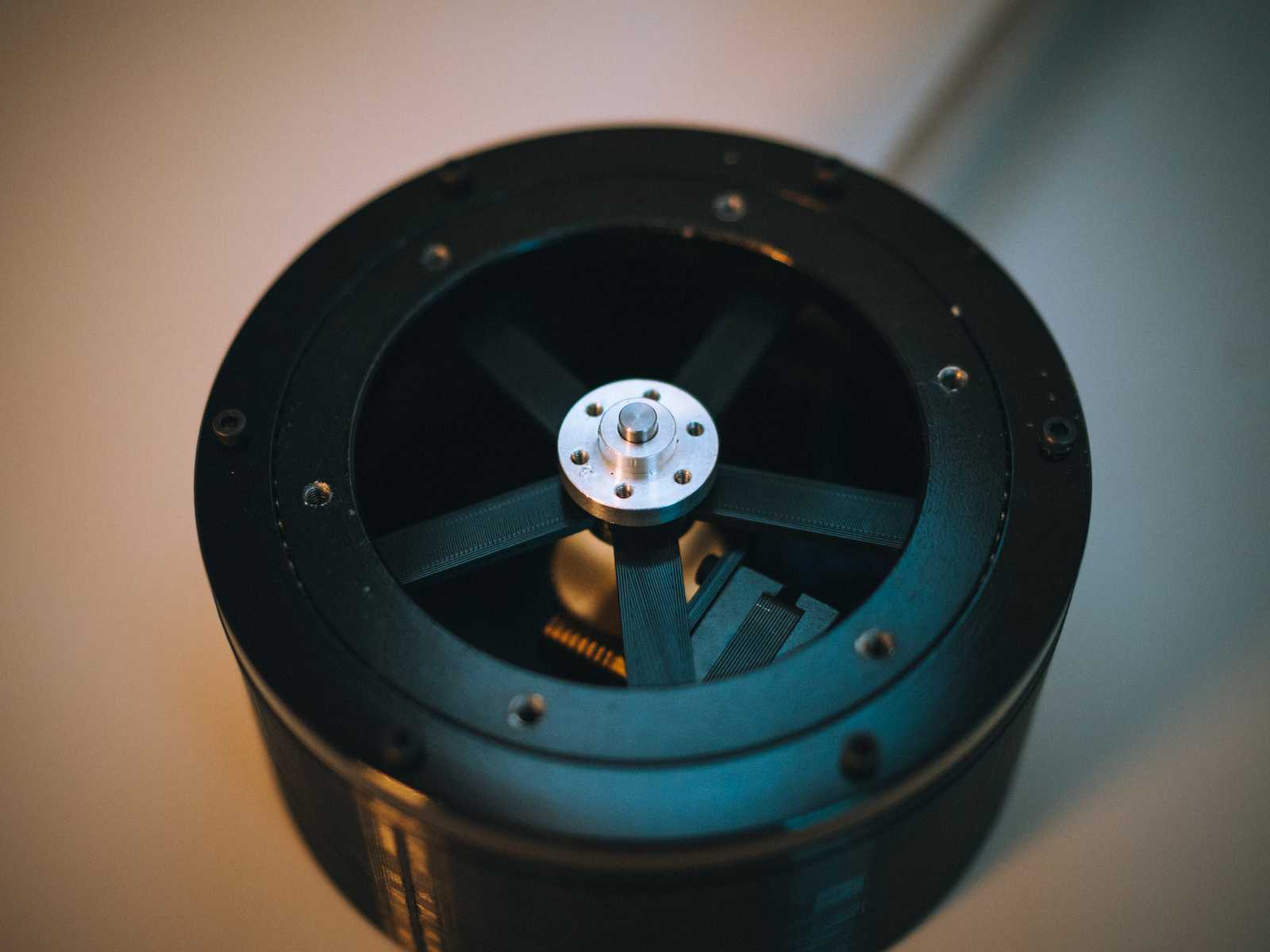

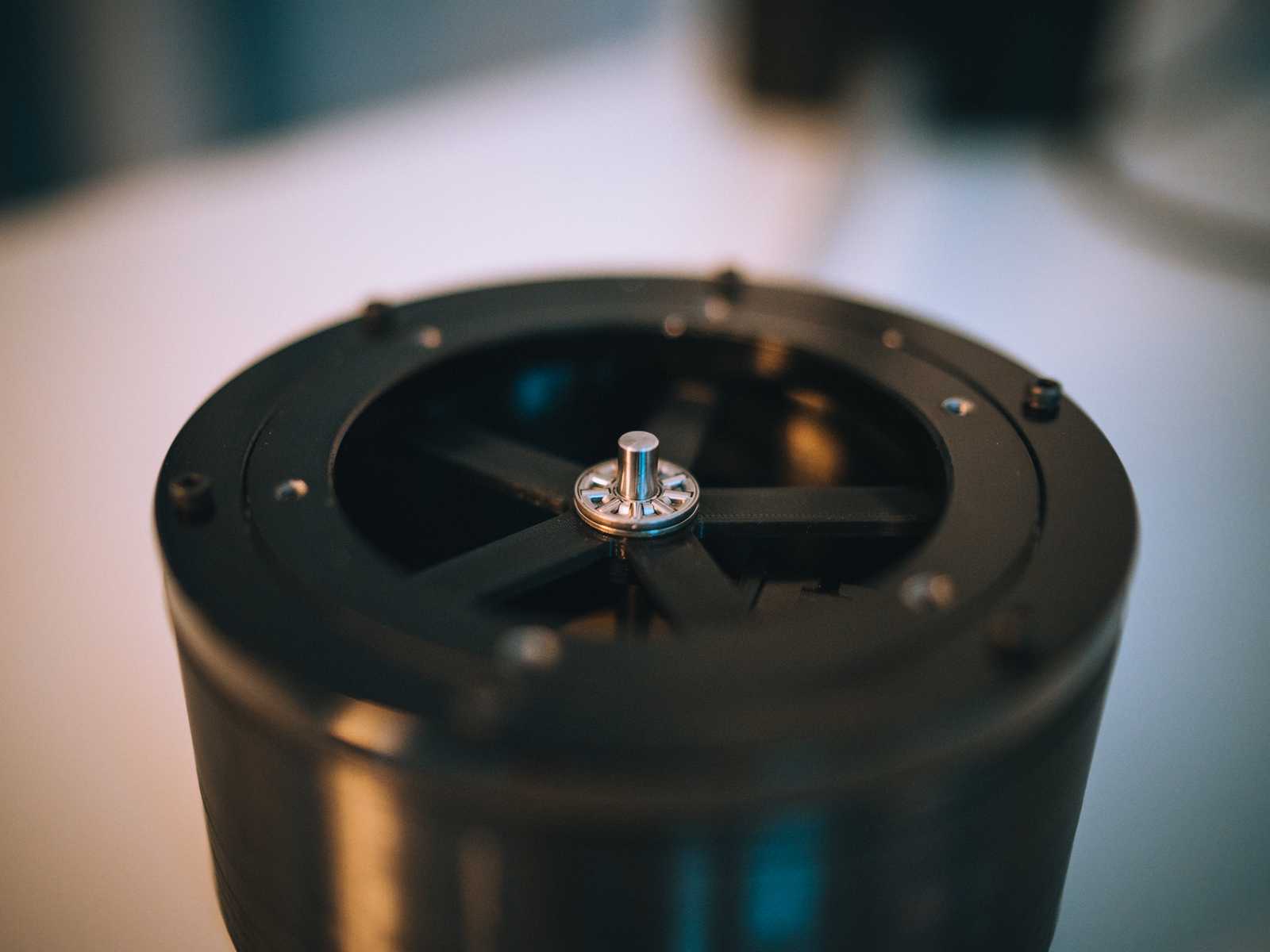

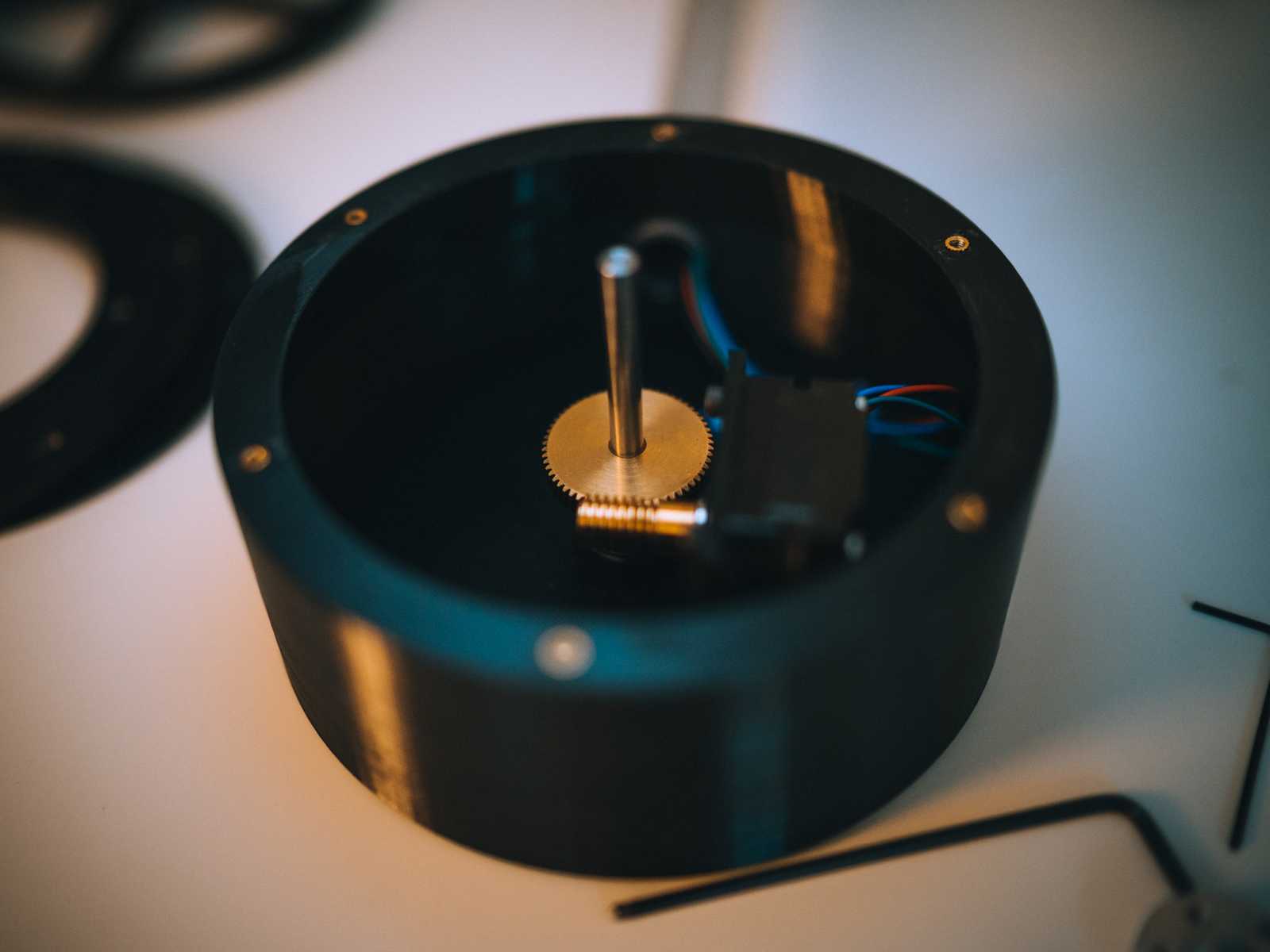

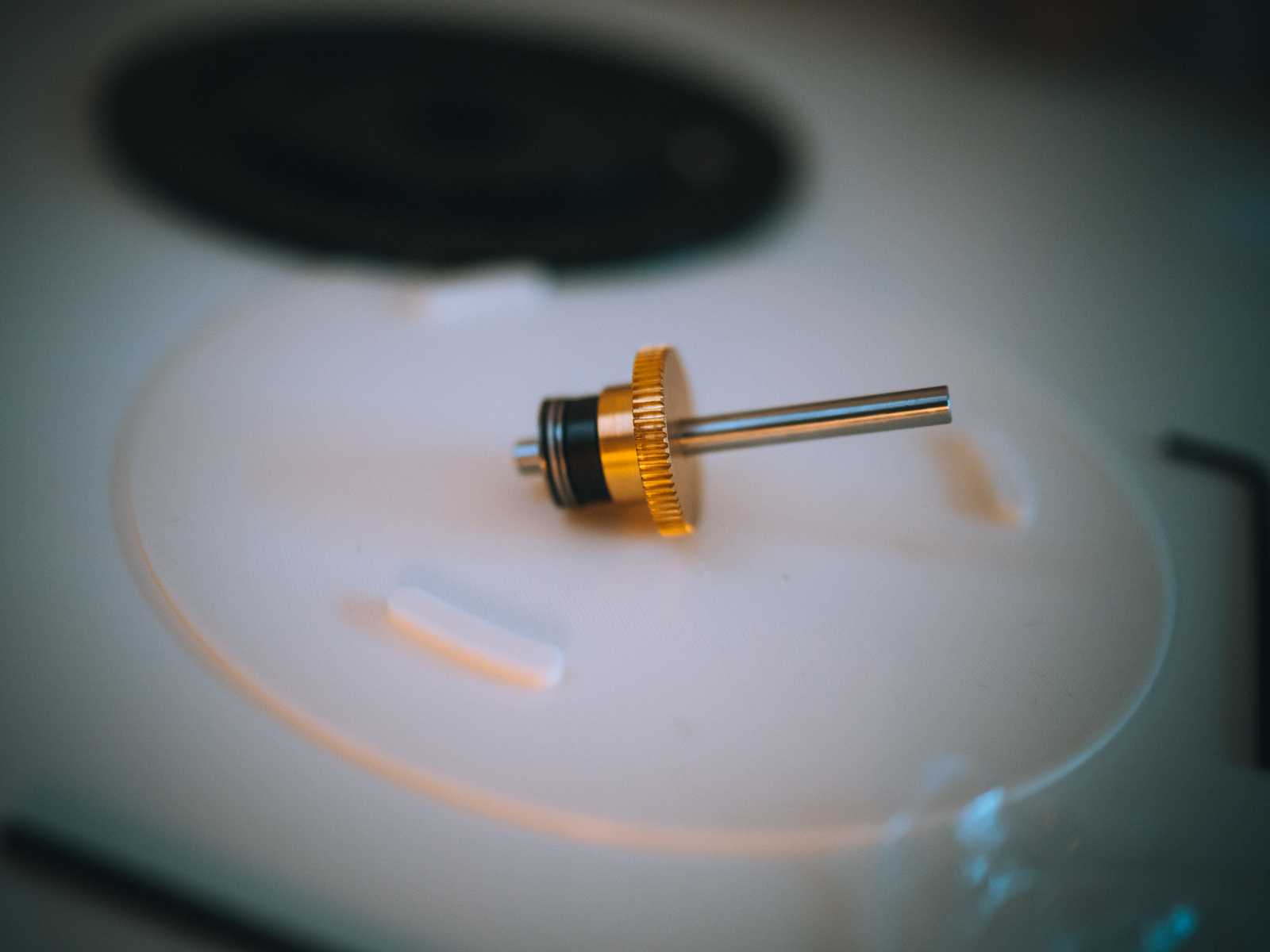

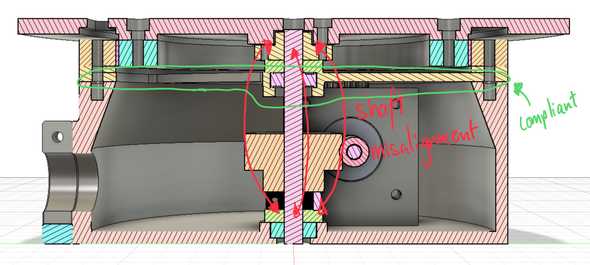

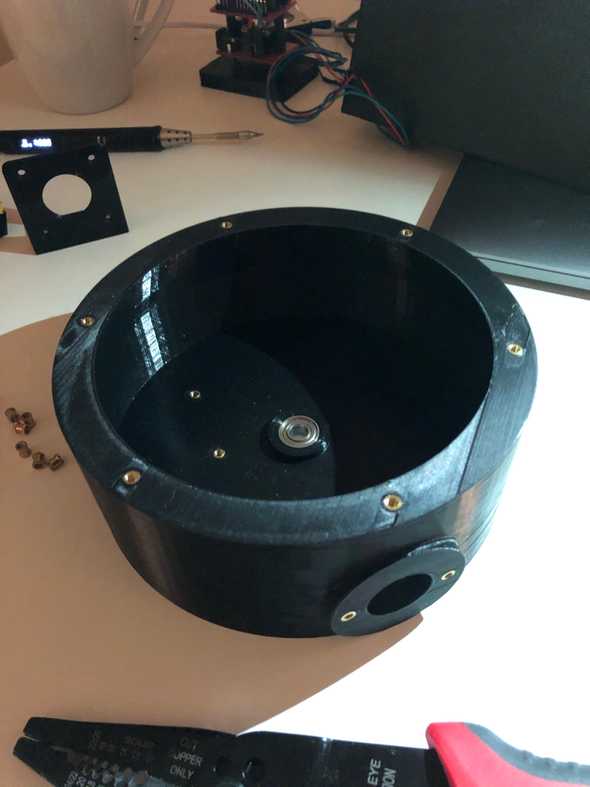

One of the main focuses of the hardware design was ease of assembly, repairability, and upgradeability. I went with a worm drive gearbox for the rotating bed because of its inherent resistance to backdriving. The driven gear is connected to a stainless steel shaft. The gear and mounting hub are secured to the shaft with set screws. I hate set screws with a passion: they always come undone and end up scoring your shaft. To alleviate the woes of set screws, I reduced the vertical forces acting on them by designing the hardware stackup along the shaft so that the set screw components rest on axial thrust bearings. That way, at least the weight of the set screw components won’t act on the set screws. Because of 3D printing tolerances, there may be shaft misalignment, in addition to misalignment between the gears caused by the stepper motor mount. I mitigated this issue by designing the floating brace to be slightly compliant.

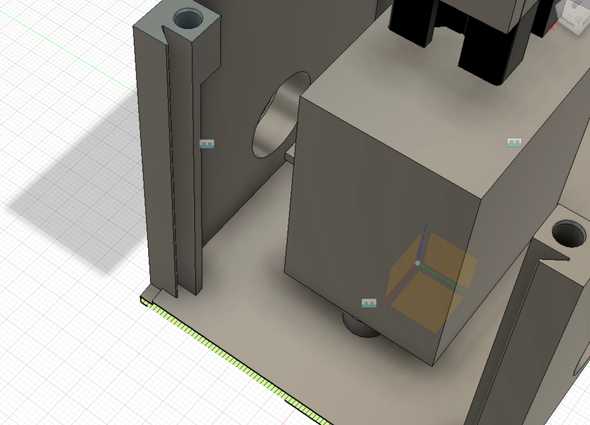

Designing the turntable assembly to be put together from the bottom up in an intuitive way proved to be extremely challenging. I had many factors to consider, including 3D printability, wall thicknesses to mask screw heads, structural integrity, and overall component-to-component interaction. I also optimized the design of each component to standardize fastener sizes.

I envisioned the electronics housing having removable sides for easy access to the electronics during debugging. I designed a self-aligning sliding profile that resists motion along all axes except Z (up and down).

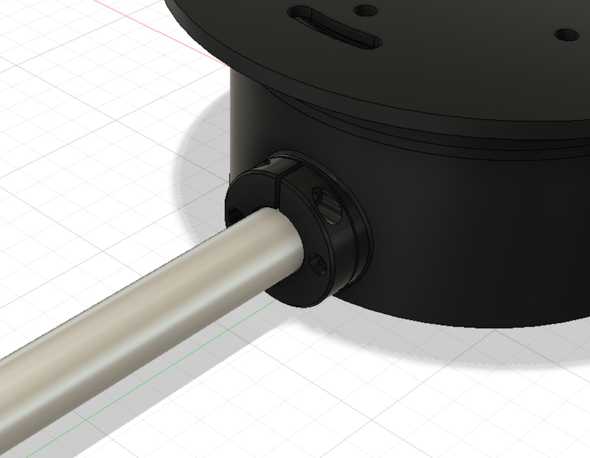

The aluminum pipe bridging the electronics housing and turntable is secured through friction on both ends.

Overall, I think assembly is pretty easy—check out some photos of the build process.

Electronics Design

For the electronics, I went with a stacked board design to save horizontal space for additional components I may add in the future. Hot-swapping components is also very straightforward in case anything blows up. I am powering the Arduino and stepper driver with a 12 V, 2 A wall adapter. I did not add a voltage regulator such as an LM317 (a cheap linear regulator) or a switching regulator for my Arduino, because my Arduino Nano 33 IoT comes with an MPM3610, which its spec sheet indicates is a large upgrade over the voltage regulator on standard Arduinos. I also opted for Dupont connectors instead of more secure JST connectors because Dupont cables are easy to remove, whereas I find that JST connectors often get stuck.

In the back you can see my TS80 soldering iron. It is worth the hype!

Results

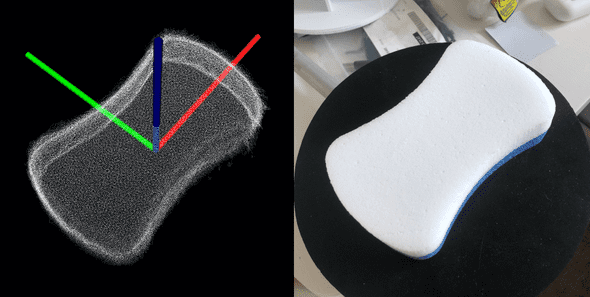

Below is an image of a sponge with ~480,000 points scanned at 10 deg intervals.

The scanner does a good job of capturing the curvature of the model and even the nuanced taper along the z-axis (blue) from the white portion of the sponge to the blue portion. The edges of the cloud are not as sharp as the real-life model because of rounding caused by the bilateral filter. Edges can be sharpened at the expense of surface smoothness. There is some point overshoot around the perimeter, which can be reduced by further tuning the camera parameters and adding another pass of outlier removal. Overall, SwagScanner’s current results are much better than those of previous software versions. Each scanning pipeline revision has brought a big leap in quality, and I expect the next major iteration to produce significantly better results after parameter tuning and additional filtering.

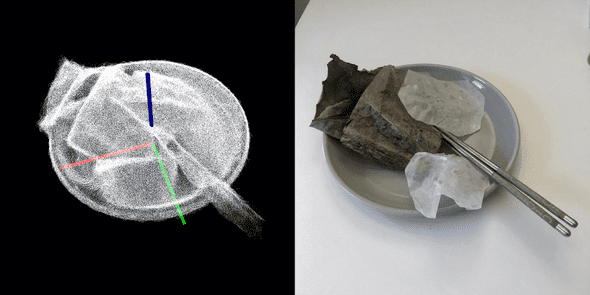

Now let’s try something a bit more complex:

I scanned a plate containing green leaves, two opaque pieces of paper, and two chopsticks. For this, I used 40 scans at 9-degree intervals, resulting in a cloud with 1.1 million points. The scanner does a good job of representing the round geometry of the plate and capturing the folds and overhangs of the leaves and paper. However, the chopsticks almost meld into one unit because they are shiny and scatter the dot projection. The plate ridge is also a little thicker in the pointcloud than in real life; I think this also has to do with the reflective nature of the plate. With complex multi-part geometries, you can see the disadvantages of a cloud-only scan. Had this scan included a texture map from an RGB image, you would be able to differentiate the clear paper from the green leaves.

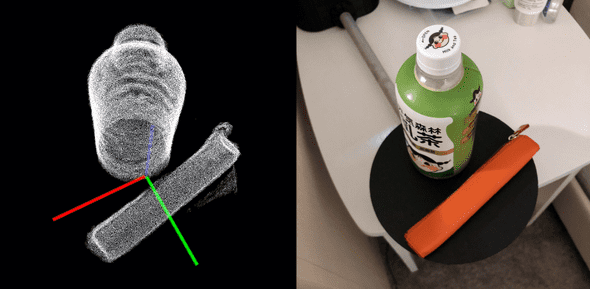

Okay, what if you scan something off axis? That sounds too easy, let’s make it harder and scan TWO objects that are off axis:

Above is a 730,000-point scan of a bottle of matcha and a pencil case. Even though the objects are placed off-axis on the scanning table, they are registered properly. You can see a blob of noise at the bottom of the pencil case that my outlier filter didn’t quite pick up. The scanner does a good job of capturing the shape of both objects, and the curvature of the pencil case appears accurate. Although the pointcloud makes the pencil case look bigger than its real-life counterpart, that is just the iPhone camera’s field of view playing tricks on you: the iPhone camera has a wider field of view than the pointcloud visualizer, so the case looks smaller in the iPhone image on the right.

Improving results

Work in progress!

Physical noise reduction

Depth data collected by Intel RealSense cameras is very noisy compared to data from the Kinect. In the pointcloud below, taken by the SR305, you can see the noise as a wavy pattern. I can mitigate noise in two ways: first by physical means, and second with post-processing. Because SwagScanner supports multiple cameras, noise reduction should generalize across cameras. All depth cameras generate more noise as distance increases (though the noise-to-distance ratio varies from camera to camera), so I designed the distance between the camera and the scanned object to be the minimum scanning distance for the set of sensors. I also outline constraints for the user, such as using the scanner indoors with minimal reflective surfaces in the room.

Post-processing noise reduction

I have found very good results applying a spatial edge-preserving filter to smooth noise from the RealSense cameras. This filter runs very fast and smooths the data while maintaining edges. I used the filter provided by the librealsense SDK. One of its parameters is smooth alpha, which controls how aggressive the filter is: the lower the value, the more aggressive the filter and the more rounded the edges become.

Other methods of noise reduction

Other methods of noise reduction would add more complexity to the system than needed. As outlined in Intel’s paper on tuning RealSense cameras, it is possible to use an external projector to increase depth quality, among several other methods.
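A simplified, one-dimensional sketch of the idea behind the filter; it is not librealsense's implementation, which is more involved and runs over the whole image. Neighboring depths that differ by less than a hypothetical `delta` threshold are blended with an exponential moving average controlled by alpha, while larger jumps count as edges and are left alone, which is why edges survive:

```python
def edge_preserving_smooth(row, alpha, delta):
    """One left-to-right pass of a recursive edge-preserving filter.

    alpha = 1 leaves the data unchanged; smaller alpha smooths more.
    Jumps of at least `delta` between neighbors are treated as edges.
    """
    out = list(row)
    for i in range(1, len(out)):
        if abs(row[i] - row[i - 1]) < delta:
            out[i] = alpha * row[i] + (1 - alpha) * out[i - 1]
    return out
```

With row = [1.00, 1.02, 0.98, 1.01, 2.00, 2.02], alpha = 0.5, and delta = 0.1, the first four values are pulled closer together while the step to 2.00 is kept intact.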

Noise from scanning bed

Originally I was using a white scanning surface. It was very easy to detect its plane and remove it from the cloud at the end. However, the combination of its reflectiveness and slightly glossy surface seemed to introduce noise at the bottom of the scans. In the photo below you can see a rounded corner between the bed and the object.

Lessons learned

Lessons Learned So Far

On a high level, working on this project reinforced my ability to understand and bounce between high-level and low-level subsystems both on the hardware and software side. In this section, I will outline lessons learned in bullet format hoping that people can learn from my mistakes at a glance instead of reading a wall of text.